Adaptive multimodal interaction in autonomous cars

Adaptive multimodal interaction allows autonomous cars to communicate effectively with drivers, ensuring safety and minimizing distractions. Adaptability is a key capability for maintaining driver performance, enhancing the driver's experience and improving trust in the autonomous system. It is achieved by integrating several interaction modalities. However, interfaces and interactions may need to vary with the driving context: environmental conditions (road traffic, weather, etc.), the driver's state (cognitive and physical), the diversity of the driver population (young and old drivers) and other situational factors. Work is still needed to evaluate the possible combinations of sensory modalities and their dynamic adaptation to both driver state and driving situation. Such a study is being carried out in the AWARE2ALL project to shed light on the impact of multimodal and adaptive human-machine interfaces (HMIs) on user experience.

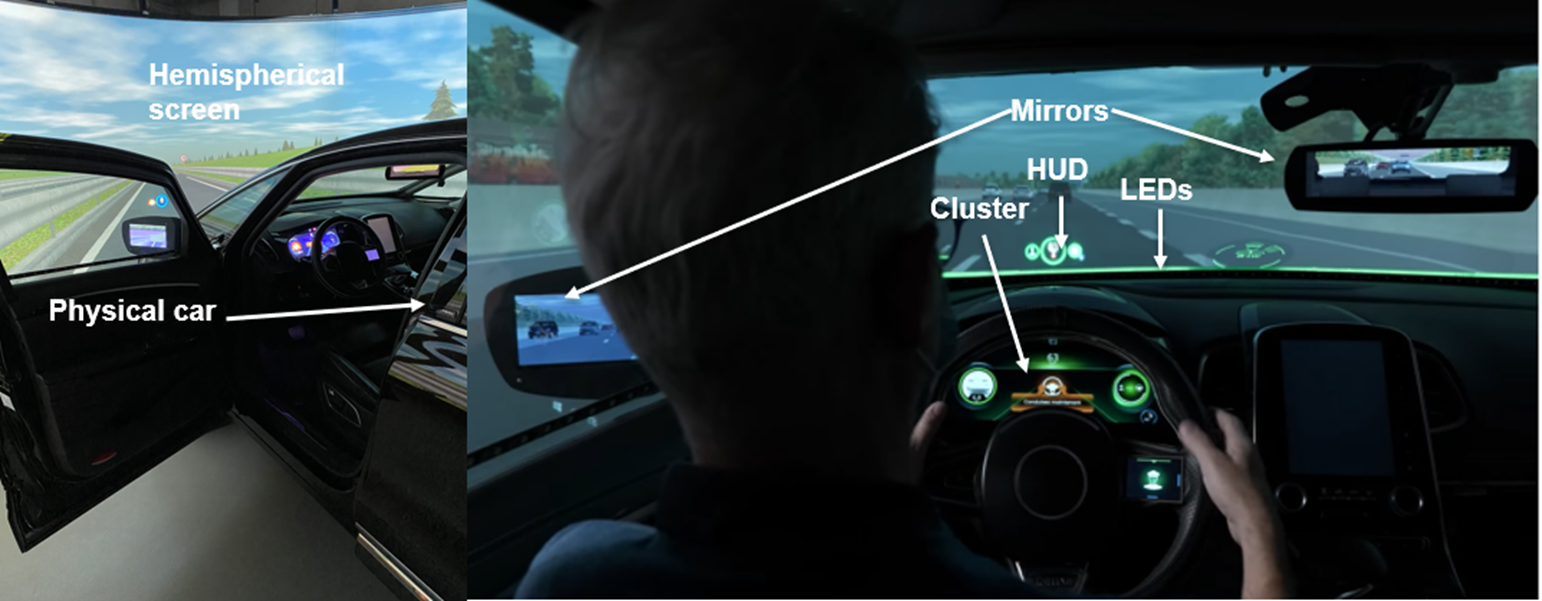

Within Aware2All, IRT SystemX provides the project with an immersive driving simulation platform. It is composed of a 180° panoramic display that provides a realistic visualization of the environment in front of a real car cockpit. Inside the cockpit, several screens are synchronized with the simulation in real time (cluster, central display, HUD, and mirrors). The cockpit is also equipped with LEDs (side windows, windshield) and speakers (cockpit and headrest) to provide both visual and auditory feedback to the occupants. For instance, spatialized sounds and LEDs around the cockpit create a kind of in-car safety cocoon for the driver and the other occupants. All modalities are synchronized in real time to create animations that smoothly convey information to the driver. For example, the autonomous car can detect another vehicle overtaking and track its position along the side of the car using a combined LED and sound animation. For confident drivers, windshield LEDs indicate that autonomous driving (AD) is activated, whereas for less confident drivers the system's presence is concentrated on the steering wheel (the "hands" of the vehicle, which handle lateral control). New actuators and sensors will be installed by different partners in the project to enhance the demonstrator and the driver experience. In particular, haptic feedback in the driver's seat will be provided by CEA, and haptic feedback in the steering wheel will be integrated by TNO.
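As an illustration only, the adaptation rule described above (windshield LEDs for confident drivers, steering wheel for less confident drivers) could be sketched as a simple lookup. The names `Confidence`, `Channel` and `ad_active_channel` are hypothetical and are not taken from the project's software; how driver confidence is actually assessed is not covered here.

```python
from enum import Enum


class Confidence(Enum):
    """Hypothetical driver confidence profile (assumed to be known upstream)."""
    CONFIDENT = "confident"
    LESS_CONFIDENT = "less_confident"


class Channel(Enum):
    """Hypothetical names for the places where AD status can be displayed."""
    WINDSHIELD_LEDS = "windshield_leds"
    STEERING_WHEEL = "steering_wheel"


def ad_active_channel(confidence: Confidence) -> Channel:
    """Pick where to signal that autonomous driving (AD) is activated.

    Mirrors the rule stated in the text: confident drivers get the
    windshield LEDs, less confident drivers get the steering wheel.
    """
    if confidence is Confidence.CONFIDENT:
        return Channel.WINDSHIELD_LEDS
    return Channel.STEERING_WHEEL


if __name__ == "__main__":
    for profile in Confidence:
        print(profile.value, "->", ad_active_channel(profile).value)
```

In a real system this choice would presumably depend on more than a single profile flag, for example on the driver state and driving situation discussed in this article.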

The combination of different sensory modalities improves the way autonomous vehicles inform and warn the driver in critical situations such as takeover requests (TOR) and shortly after the switch from Autonomous Driving (AD) to Manual Driving (MD). To ensure safety during the takeover request and to promote the driver's situational awareness, HMI adaptation will rely on sensors provided by different partners of the project. Driver state will be assessed using metrics provided by a multi-sensor occupant monitoring system (OMS) regarding the occupant's health, real-time workload estimates based on multi-source physiological data, and indicators such as Driver ID, Driver Distraction, Hands-On-Steering-Wheel, and Situational Awareness level. A large user testing campaign will be held to gather results on the performance of adaptive multimodal interaction in the Aware2All scenarios, which include various environmental conditions (weather, traffic, etc.) and involve different driver profiles based on age, physical disabilities (spinal cord injury, upper-limb loss, etc.) or sensory disabilities (deafness, autism spectrum, etc.).

Do you want more information? Please contact our experts:

Kahina Amokrane-Ferka kahina.amokrane-ferka@irt-systemx.fr